Restoring Trust in Large-Scale Digital Assessments: Lessons from the JAMB CBT Glitch

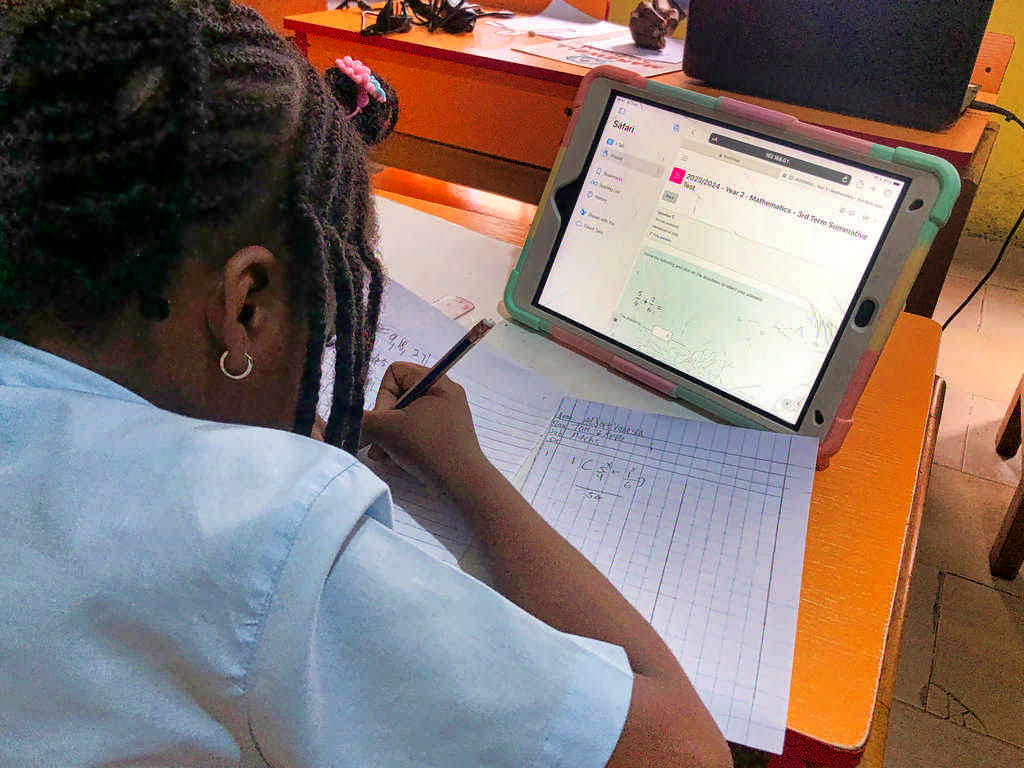

Recently, the Joint Admissions and Matriculation Board (JAMB)—Nigeria’s central body responsible for conducting entrance examinations into tertiary institutions—admitted to technical glitches during the 2024 Unified Tertiary Matriculation Examination (UTME). These glitches, though limited in scope, had disproportionately significant consequences: thousands of candidates scored below the 200-point threshold, sparking widespread concern among candidates, parents, and education stakeholders. At the heart of the issue was a failed software patch that disrupted CBT servers at 157 centres, particularly across Lagos and the South-East region. The malfunction led to inconsistencies in the delivery and marking of the tests, prompting public backlash and accusations of incompetence. In a rare act of transparency, JAMB’s Registrar, Professor Ishaq Oloyede, publicly acknowledged the failure, offered apologies, and accepted full responsibility. The board later rescheduled the affected exams at no additional cost and published updated results. While this remedial step was commendable, the incident raises deeper concerns about the integrity and resilience of national-scale digital examination systems.

A Systems Failure Beyond Just Code

What I found particularly troubling was JAMB's initial dismissal of stakeholder complaints, which suggests that its internal monitoring and reporting infrastructure failed to detect the issues in real time. The implication is profound: the body responsible for one of the most high-stakes assessments in the country was not immediately aware that its core delivery systems had failed in multiple locations. JAMB has long championed the shift to computer-based testing (CBT), and rightly so. CBTs offer numerous advantages, including efficiency, consistency, scalability, and data analytics for continuous improvement. But the transition from paper to digital is not simply about swapping physical answer sheets for computers. It demands an overhaul of operational oversight, real-time system intelligence, and incident response mechanisms.

The Case for Real-Time Monitoring and Distributed Coordination

From a technical standpoint, this incident could have been mitigated, or even prevented, if a centralised monitoring infrastructure had been in place to track the health and compliance of local CBT servers. With hundreds of distributed centres, a real-time dashboard showing server status, CBT software version, uptime, network latency, and patch history could have flagged the affected 157 centres before candidates ever sat down for their tests. Such a system isn't futuristic. It is feasible, and indeed necessary, for national-scale CBT operations. As an EdTech strategist working on CBT solutions for multi-campus school networks, I agree with JAMB's adoption of a hybrid deployment model: localised delivery through offline-capable exam servers in centres. Going forward, that model should be strengthened with centralised real-time monitoring, compliance checks, and automated patch/version coordination.
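To make the idea concrete, here is a minimal sketch of the kind of pre-exam compliance check such a dashboard could run. Everything in it is an assumption made for illustration: the `CentreStatus` fields, the centre IDs, the expected version string, and the latency limit are hypothetical and do not describe JAMB's actual systems.

```python
from dataclasses import dataclass

# Hypothetical values, chosen only for illustration.
EXPECTED_VERSION = "3.2.1"
MAX_LATENCY_MS = 500


@dataclass
class CentreStatus:
    """One status report from a centre's local CBT server (assumed shape)."""
    centre_id: str
    software_version: str
    online: bool
    latency_ms: float


def find_noncompliant(statuses, expected_version=EXPECTED_VERSION,
                      max_latency_ms=MAX_LATENCY_MS):
    """Return a dict mapping centre_id to the reasons it failed the checks."""
    problems = {}
    for s in statuses:
        reasons = []
        if not s.online:
            reasons.append("server offline")
        if s.software_version != expected_version:
            reasons.append(
                f"version {s.software_version} != expected {expected_version}")
        if s.latency_ms > max_latency_ms:
            reasons.append(f"latency {s.latency_ms:.0f} ms above limit")
        if reasons:
            problems[s.centre_id] = reasons
    return problems


# Made-up reports from three centres.
reports = [
    CentreStatus("LAG-001", "3.2.1", True, 120.0),
    CentreStatus("LAG-002", "3.2.0", True, 95.0),   # missed the latest patch
    CentreStatus("ENU-014", "3.2.1", False, 0.0),   # server not reachable
]

for centre, reasons in find_noncompliant(reports).items():
    print(centre, "->", "; ".join(reasons))
```

Run before each session, a check like this would surface any centre whose server is offline or running an unexpected software version while there is still time to fix or reassign it.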

Recommendations for Strengthening CBT Administration in Nigeria

To ensure credibility, efficiency, and resilience in future digital examinations, the following steps are critical:

- Centralised Monitoring Infrastructure: Deploy real-time dashboards to track hardware status, server logs, CBT software versions, and error rates across all centres.

- Heartbeat and Compliance Protocols: Each CBT centre should send periodic “heartbeat” signals to a central server to verify readiness and health before and during exams.

- Software Patch Management Framework: A controlled, tested, and versioned patching pipeline should be implemented, complete with rollback capabilities and audit logs.

- Automated Alerting & Escalation System: Any deviation from normal performance should trigger automated alerts to technical teams and stakeholders for swift resolution.

- Independent Oversight and Third-Party Audits: Transparent governance, with external QA audits and post-exam summaries from each centre, can further bolster trust in the system.

- Continuous Training for CBT Staff: All technical and supervisory staff at CBT centres must be trained on updated protocols, contingency handling, and NDPA-compliant data practices.
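The heartbeat and alerting recommendations above can be sketched in a few lines. This is an illustrative outline under stated assumptions, not a production design: the 60-second interval, the three-missed-beats tolerance, the centre IDs, and the `HeartbeatMonitor` and `escalate` names are all hypothetical.

```python
from datetime import datetime, timedelta, timezone

HEARTBEAT_INTERVAL = timedelta(seconds=60)  # assumed reporting interval
MISSED_BEFORE_ALERT = 3                     # assumed tolerance before alerting


class HeartbeatMonitor:
    """Tracks the last heartbeat received from each registered centre."""

    def __init__(self, centre_ids):
        # None means the centre has never checked in.
        self.last_seen = {cid: None for cid in centre_ids}

    def record(self, centre_id, when):
        """Store the time of the latest heartbeat from a centre."""
        self.last_seen[centre_id] = when

    def stale_centres(self, now):
        """Return centres that are silent for longer than the tolerance."""
        limit = HEARTBEAT_INTERVAL * MISSED_BEFORE_ALERT
        return sorted(
            cid for cid, seen in self.last_seen.items()
            if seen is None or now - seen > limit
        )


def escalate(centre_ids, notify=print):
    """Send one alert per stale centre; notify could wrap SMS or email."""
    for cid in centre_ids:
        notify(f"ALERT: no recent heartbeat from centre {cid}")


# Arbitrary fixed timestamp so the example is reproducible.
now = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
monitor = HeartbeatMonitor(["LAG-001", "LAG-002", "ENU-014"])
monitor.record("LAG-001", now - timedelta(seconds=30))   # healthy
monitor.record("LAG-002", now - timedelta(minutes=10))   # gone quiet
stale = monitor.stale_centres(now)                       # ENU-014 never reported
escalate(stale)
```

Treating a centre that has never reported as stale is a deliberate choice here: silence before an exam is exactly the condition the central team needs to hear about.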

Toward a Smarter, Trustworthy Future

Technology is not infallible, especially when it relies on human factors in its administration and application, as the recent incident showed. Its failure must therefore never come as a surprise. The 2024 UTME glitch serves as a wake-up call, not just for JAMB but for every education authority and solution provider in Nigeria's EdTech space. It's a call to upgrade our operational intelligence, not just our hardware. It's a call to embed foresight and responsiveness into the very fabric of digital assessments. As we build the next generation of CBT platforms, whether for schools, corporate organisations, ministries, or examination bodies, resilience by design must be our mantra. The stakes are too high, the candidates too many, and the trust too fragile to gamble on hope instead of system-led certainty.
